Let’s Talk about Meetings

A paper agenda outlining a month full of meetings

Years ago, well before the AI meeting summary feature, I recall a tool that allowed the audience to give real-time feedback on meetings and presentations. The technology argument writes itself:

Meetings are about experiences,

Gather moment-to-moment feedback on those experiences, and

Use the data to evaluate/optimize meetings.

Throughout the course of the meeting, participants could "input" POSITIVE and NEGATIVE engagement scores, which would be immediately visible to the person running the meeting. Based on these inputs, that person would change behaviours in order to avoid the NEGATIVE and foster POSITIVE responses. It sounds simple, but like all human interactions, simple does not mean easy.

Among the many potential problems is that of distinguishing between POSITIVE and NEGATIVE behaviours. For example, we may observe Person A ask Person B a question to which Person B is unable to respond.

Was the question unfair (e.g. a NEGATIVE score for Person A for bringing in an inappropriate question)?

Does this show a lack of sufficient preparation (e.g. a NEGATIVE for Person B for insufficient meeting preparedness)?

Was the question a catalyst for discussion (e.g. a double POSITIVE score: Person A, for starting an important conversation; AND Person B, for responding without trying to “answer” the question in a way that would stifle the conversation)?

Prior interactions between the two (i.e. history) and surrounding actions (i.e. context) will play a role, but such moments can be used to reinforce collectively desirable behaviours. Do we want our people to:

Say, "I don't know" rather than skating or parrying

Accept, "I don't know" rather than exposing the ignorance or ignoring complexity

Heed direction on required pre-work and role expectations

Intervene appropriately to fulfill the meeting objectives

How does this sound?

We borrow the "keynote listener" tactic from my friend Jennifer LaTrobe and have someone "score" the meeting based on pre-determined behaviours, both desired and not.

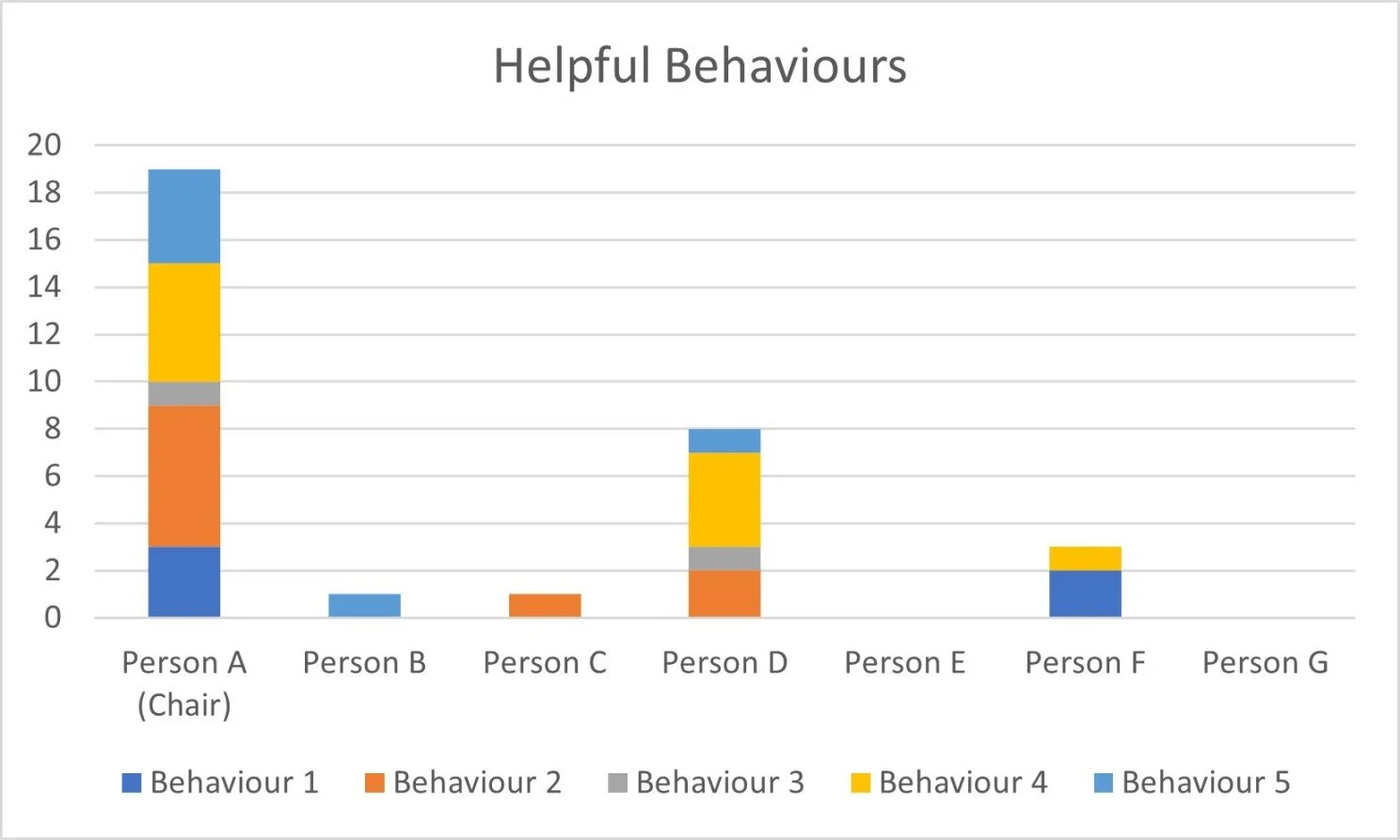

Vertical axis: Instances of desirable behaviours observed by the keynote listener in a meeting (Example)

Vertical axis: Instances of undesirable behaviours observed by the keynote listener in a meeting (Example)

As much as possible, we find and explain the specific context of the behaviour, so rather than listing "Interrupting" as a behaviour to avoid, we would use, "Interrupting without offering a chance to rebut." Similarly, we may identify "pausing to invite contributions" as a behaviour that one demonstrates by not doing anything.

To that point, "air time" in meetings is easy to quantify, but it takes savvy interpretation to assess its impact on collaboration.
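As a rough illustration of the "easy to quantify" part, here is a minimal sketch that turns a log of speaking turns into each person's share of air time. The function name `speaking_shares`, the log format, and the numbers are all assumptions made up for this example; they are not the data from the meetings discussed here.

```python
from collections import defaultdict

def speaking_shares(turns):
    """Return each speaker's share of total speaking time (0.0 to 1.0).

    `turns` is a list of (speaker, seconds) pairs, one per speaking turn.
    This log format is a hypothetical choice for the sketch.
    """
    totals = defaultdict(float)
    for speaker, seconds in turns:
        totals[speaker] += seconds

    grand_total = sum(totals.values())
    if grand_total == 0:
        return {}  # nobody spoke, so there are no shares to report

    return {speaker: t / grand_total for speaker, t in totals.items()}

# Made-up log for illustration only
turns = [
    ("Chair", 120),
    ("Person A", 60),
    ("Chair", 60),
    ("Person B", 60),
]

shares = speaking_shares(turns)
for speaker, share in sorted(shares.items()):
    print(f"{speaker}: {share:.0%}")
```

The arithmetic is the simple part. What the percentages say about collaboration still has to be worked out in conversation.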

Consider these accounts:

Meeting I

MEETING I Speaking Time Data

Meeting II

MEETING II Speaking Time Data

Meeting III

MEETING III Speaking Time Data

The data has the Chair speaking anywhere from one-tenth (Meeting III) to two-thirds (Meeting II) of the time. Is one of those better than the other? Person E did not contribute noticeably in any of the meetings. Is this a problem?

Answering these is like commenting on the fact that a car was going 87 km/h. Whether that was on a divided highway in great weather or in a school zone at 3 PM on a school day leads to very different judgements.

As in all complex environments (like keeping a person healthy or creating psychological safety in workplace interactions), data provides an objective beginning to a valuable conversation.

Do people resist challenging perspectives in meetings (at the expense of wiser decisions)?

Do we allow some to dominate (at the expense of other valuable perspectives)?

Rather than a report card on whether or not you pass the collaboration exam, this is a 12-point check-up to see if and where we can put our collaboration attentions and intentions.

NOTE: In case this was not clear, if you need a keynote listener, please get in touch!